Scraping the WGU Catalog

In Part 1 of this 3-part series, we’ll scrape the WGU Institutional Catalog’s Instructor Directory section.

Introduction

WGU’s Institutional Catalog includes an Instructor Directory with over 1,000 faculty members, listing their degrees and alma maters. While the data appears tabular, small formatting errors and inconsistencies make direct analysis difficult.

This project converts 18 pages of semi-structured text into a clean, analysis-ready dataset by:

- Parsing the raw directory

- Normalizing 80+ degree variations into standardized categories

- Applying automated validation checks

The resulting dataset supports analysis of faculty composition and diversity, including questions such as “How do degree types vary across colleges?” and “What is the geographic distribution of WGU faculty?”

Using Python (regex and pandas), I parsed the instructor directory, cleaned anomalies, and produced a normalized CSV as the foundation for the next two posts in this series:

- Part 2: Geotagging alma maters to map instructor origins

- Part 3: Building a searchable archive of faculty publications

Dataset Description

WGU publishes its Institutional Catalog nearly every month and archives past issues on its website.

In the June 2025 issue, the Instructor Directory spans pages 313–331.

Each entry follows a basic pattern:

Last, First; Degree, University

Entries are grouped under college headers, but the text contains occasional anomalies and formatting errors that require cleaning before analysis.
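As a rough illustration of the basic entry pattern, a single well-formed row can be split with one regular expression. The `ROW_RE` pattern and `parse_row` helper below are hypothetical, not the script used in this project. The degree is taken up to the first comma after the semicolon, so university names that contain commas stay intact, while malformed rows simply fail to match.

```python
import re

# Hypothetical pattern for the canonical "Last, First; Degree, University" entry.
# The degree runs up to the first comma after the semicolon; everything after
# that comma (including any further commas) is treated as the university.
ROW_RE = re.compile(
    r"^(?P<last>[^,;]+),\s*(?P<first>[^;]+);\s*"
    r"(?P<degree>[^,]+),\s*(?P<university>.+)$"
)


def parse_row(line):
    """Return a dict of fields for a well-formed row, or None if it doesn't match."""
    m = ROW_RE.match(line.strip())
    if m is None:
        return None
    return {key: value.strip() for key, value in m.groupdict().items()}


print(parse_row("Adams, Sarah; PhD, Stanford University"))
print(parse_row("Clark. Traci; PhD, Stanford"))  # period instead of comma: no match
```

Returning `None` instead of raising keeps the strict pattern usable as a first pass, with anything that fails handed to more tolerant fallback logic.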

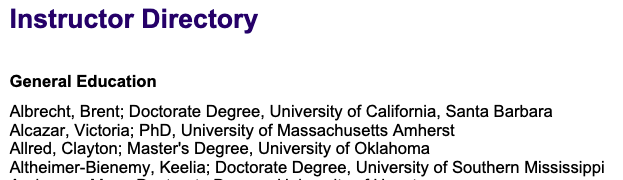

Catalog Screenshot:


Process Overview

The data extraction and cleaning process involved three main phases:

- Extract - Copy the 18-page instructor directory from PDF to plain text

- Parse & Clean - Handle formatting inconsistencies and data quality issues

- Normalize & Export - Standardize degree names and output analysis-ready CSV

Raw Data Extraction

The instructor directory spans pages 313–331 of the June 2025 catalog. I copied the entire section into a plain-text file, instructor_data_raw.txt.

The structure follows a consistent pattern:

- College headers separate each section

- Instructor rows follow the format: Last, First; Degree, University

- Page footers contain copyright notices that need filtering

Example structure:

General Education

Adams, Sarah; PhD, Stanford University

Baker, Michael; Master's Degree, University of Utah

School of Business

Clark, Jennifer; MBA, Harvard Business School

Parsing & Cleaning

The parsing script walks through each line, detects college headers, and extracts instructor data. The semi-structured format required handling various formatting inconsistencies that would break naive parsing.

Core parsing logic:

```python
import re

catalog_headers = (
    "Instructor Directory", "General Education", "School of Business",
    "Leavitt School of Health", "School of Technology",
    "School of Education", "WGU Academy",
)

# Page footers look like "© Western Governors University ... <page number>"
footer_re = re.compile(r"^©\s*Western Governors University\b.*\d{1,4}$")

# utf-8-sig silently drops a byte-order mark if the text file has one
with open("instructor_data_raw.txt", encoding="utf-8-sig") as f:
    current_college = None
    for lineno, raw in enumerate(f, start=1):
        s = raw.strip()
        if not s or footer_re.match(s):
            continue  # skip blank/footer lines
        if s in catalog_headers:
            current_college = s  # following rows belong to this college
            continue
        # parse as instructor row: "Last, First; Degree, University"
        # (process_instructor_row is defined elsewhere in the script)
        process_instructor_row(s, current_college)
```
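Because `process_instructor_row` isn't shown, here is a self-contained sketch of the same loop running on a small made-up sample instead of the real file. The splitting logic inside this `process_instructor_row`, the record field names, the extra `records` argument, and the footer text in the sample are all assumptions for illustration; a real implementation also has to cope with the malformed rows described below.

```python
import io
import re

CATALOG_HEADERS = (
    "Instructor Directory", "General Education", "School of Business",
    "Leavitt School of Health", "School of Technology",
    "School of Education", "WGU Academy",
)
FOOTER_RE = re.compile(r"^©\s*Western Governors University\b.*\d{1,4}$")

# Made-up sample in the directory's layout; the footer line format is assumed.
SAMPLE = """General Education
Adams, Sarah; PhD, Stanford University
Baker, Michael; Master's Degree, University of Utah

© Western Governors University 2025 313
School of Business
Clark, Jennifer; MBA, Harvard Business School
"""


def process_instructor_row(s, college, records):
    """Hypothetical stand-in: split on ';', then on the first ',' of each half."""
    name, _, credential = s.partition(";")
    last, _, first = name.partition(",")
    degree, _, university = credential.partition(",")
    records.append({
        "college": college,
        "last": last.strip(), "first": first.strip(),
        "degree": degree.strip(), "university": university.strip(),
    })


def parse_directory(f):
    """Walk the lines, tracking the current college header."""
    records, current_college = [], None
    for raw in f:
        s = raw.strip()
        if not s or FOOTER_RE.match(s):
            continue  # skip blank lines and page footers
        if s in CATALOG_HEADERS:
            current_college = s
            continue
        process_instructor_row(s, current_college, records)
    return records


rows = parse_directory(io.StringIO(SAMPLE))
```

`io.StringIO` stands in for the open file handle, so the same `parse_directory` function can be pointed at `open("instructor_data_raw.txt", encoding="utf-8-sig")` unchanged.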

Common formatting issues handled:

- Periods instead of commas: Clark. Traci; PhD, Stanford

- Missing separators: Victoria; PhD University of Michigan

- Typos in degrees: Master's's Degree, East Carolina University

Regex patterns and fallback logic catch these inconsistencies and flag them for tolerant parsing.
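A minimal sketch of what such fallback repairs could look like for the three issue types above. The `repair_row` function, its flag names, and the short `KNOWN_DEGREES` list are hypothetical; the project's real list covers far more variants, and these heuristics would misfire on, say, a degree written as "MS Nursing".

```python
import re

# Hypothetical degree tokens used to re-insert a missing comma. Longer tokens
# come first so "MBA" is tried before the shorter "MA".
KNOWN_DEGREES = ("Master's Degree", "PhD", "EdD", "MBA", "MS", "MA")


def repair_row(s):
    """Best-effort fixes; returns (repaired_text, list_of_flags)."""
    flags = []
    name, sep, rest = s.partition(";")
    # "Clark. Traci" -> "Clark, Traci" when the name part has no comma at all
    if sep and "," not in name and ". " in name:
        name = name.replace(". ", ", ", 1)
        flags.append("period_for_comma")
    # "Master's's" -> "Master's" (straight or curly apostrophes)
    fixed = re.sub(r"(['’])s['’]s\b", r"\1s", rest)
    if fixed != rest:
        flags.append("degree_typo")
        rest = fixed
    # "PhD University of Michigan" -> "PhD, University of Michigan"
    stripped = rest.strip()
    for deg in KNOWN_DEGREES:
        if stripped.startswith(deg + " "):
            rest = " " + deg + "," + stripped[len(deg):]
            flags.append("missing_separator")
            break
    return name + sep + rest, flags


print(repair_row("Clark. Traci; PhD, Stanford"))
print(repair_row("Victoria; PhD University of Michigan"))
```

Returning flags alongside the repaired text keeps a record of which rows were altered, so they can be reviewed by hand instead of being silently rewritten.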

Validation logic:

To guarantee no data is lost, the script performs strict line-count validation. Every input line is classified (title, header, footer, blank, instructor, or other).
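The classification step can be sketched as a function that assigns exactly one category to each line, so that the category counts add back up to the number of input lines. The rules below (exact-match title and headers, the footer regex, and "exactly one semicolon" as the instructor test) are assumptions; the project's actual rules may be stricter.

```python
import re
from collections import Counter

# Assumed category rules for illustration.
TITLE = "Instructor Directory"
HEADERS = {
    "General Education", "School of Business", "Leavitt School of Health",
    "School of Technology", "School of Education", "WGU Academy",
}
FOOTER_RE = re.compile(r"^©\s*Western Governors University\b.*\d{1,4}$")
ROW_RE = re.compile(r"^[^;]+;[^;]+$")  # loose test: exactly one semicolon


def classify(line):
    """Return exactly one category per raw line so each line is counted once."""
    s = line.strip()
    if not s:
        return "blank"
    if s == TITLE:
        return "title"
    if s in HEADERS:
        return "header"
    if FOOTER_RE.match(s):
        return "footer"
    if ROW_RE.match(s):
        return "instructor"
    return "other"


lines = [
    "Instructor Directory", "General Education",
    "Adams, Sarah; PhD, Stanford University", "",
    "© Western Governors University 2025 313", "stray text",
]
counts = Counter(classify(ln) for ln in lines)
assert sum(counts.values()) == len(lines)  # nothing dropped or double-counted
```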

At the end, the script:

- Reconstructs the total from all categories and compares it to the expected document length (EXPECTED_TOTAL = 1159)

- Confirms that the sum of instructor counts by college matches the total instructor rows parsed

- Exits with an error if any mismatch occurs (useful for CI or reproducibility)

This ensures every catalog line is accounted for and every instructor row is captured exactly once.
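The two end-of-run checks could be sketched as follows. `EXPECTED_TOTAL` comes from the post; the `validate` and `main` function names and the shape of their inputs (a category-to-count mapping and a college-to-instructor-count mapping) are assumptions.

```python
import sys

EXPECTED_TOTAL = 1159  # expected document length, as stated in the post


def validate(category_counts, instructors_by_college, expected_total=EXPECTED_TOTAL):
    """Return a list of error messages; an empty list means both checks passed."""
    errors = []
    total = sum(category_counts.values())
    if total != expected_total:
        errors.append(f"line total {total} != expected {expected_total}")
    instructor_rows = category_counts.get("instructor", 0)
    by_college = sum(instructors_by_college.values())
    if by_college != instructor_rows:
        errors.append(f"college sum {by_college} != instructor rows {instructor_rows}")
    return errors


def main(category_counts, instructors_by_college):
    errors = validate(category_counts, instructors_by_college)
    for err in errors:
        print("VALIDATION ERROR:", err, file=sys.stderr)
    if errors:
        sys.exit(1)  # a non-zero exit status fails a CI job
```

Collecting every error before exiting, instead of stopping at the first mismatch, makes a failing run report all problems at once.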

Degree Normalization

The largest data quality challenge was inconsistent degree naming. The raw data contained over 80 unique degree variations like “Master’s Degree,” “Masters Degree,” “MA,” “M.A.,” and “Master’s’s Degree” (with the double possessive typo).

I created a normalization script that maps degree variations to standardized names, then groups them into four academic levels.

Normalization approach:

- Standardize names: “Master’s Degree” → “Master”, “PhD” → “PhD”

- Group by level: All master’s variants → “master”, all doctorates → “doctorate”

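```python
# Maps raw degree spellings to standardized names
DEGREE_MAP = {
    "Master's Degree": "Master", "MA": "MA", "MS": "MS", "MBA": "MBA",
    "PhD": "PhD", "EdD": "EdD", "DBA": "DBA", "DNP": "DNP",
    # ... 30+ more mappings
}

def infer_degree_level(standard):
    """Map a standardized degree name to its academic level."""
    u = standard.upper()
    if u in DOCTORATE_TITLES:  # uppercase doctorate names, defined elsewhere
        return "doctorate"
    if u in MASTER_TITLES:  # uppercase master's names, defined elsewhere
        return "master"
    # ... etc
```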
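The excerpt above leaves out most of the mapping along with `DOCTORATE_TITLES` and `MASTER_TITLES`. A self-contained sketch of the path from a raw string to a level might look like this. The trimmed tables, the `clean_degree` pre-cleaning rules, and the `"other"`/`"unmapped"` fallback labels are hypothetical; the post doesn't list its remaining levels.

```python
import re

# Hypothetical, trimmed-down lookup tables.
DEGREE_MAP = {
    "Master's Degree": "Master", "MA": "MA", "MS": "MS", "MBA": "MBA",
    "PhD": "PhD", "EdD": "EdD", "DBA": "DBA", "DNP": "DNP",
}
DOCTORATE_TITLES = {"PHD", "EDD", "DBA", "DNP"}
MASTER_TITLES = {"MASTER", "MA", "MS", "MBA"}


def clean_degree(raw):
    """Collapse common spelling variants onto a single DEGREE_MAP key."""
    s = raw.strip().replace("\u2019", "'")      # curly -> straight apostrophe
    s = re.sub(r"'s's\b", "'s", s)              # "Master's's" -> "Master's"
    s = re.sub(r"\bMasters\b", "Master's", s)   # "Masters Degree" -> "Master's Degree"
    if re.fullmatch(r"(?:[A-Za-z]{1,2}\.){2,}", s):
        s = s.replace(".", "")                  # "M.A." -> "MA", "Ph.D." -> "PhD"
    return s


def normalize(raw):
    """Return (standard_name, level); hypothetical fallbacks for unknown input."""
    standard = DEGREE_MAP.get(clean_degree(raw))
    if standard is None:
        return None, "unmapped"
    u = standard.upper()
    if u in DOCTORATE_TITLES:
        return standard, "doctorate"
    if u in MASTER_TITLES:
        return standard, "master"
    return standard, "other"


print(normalize("M.A."))
print(normalize("Master\u2019s\u2019s Degree"))
```

Cleaning before lookup keeps the map small: many raw variants collapse onto one key, and anything still unmapped is surfaced explicitly instead of being guessed.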

Results snapshot:

The cleaned dataset shows clear trends, such as the School of Technology having a noticeably higher share of instructors with master’s degrees compared to other colleges, an insight worth deeper investigation.
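One way to quantify a comparison like this is a row-normalized crosstab of college against degree level. The column names `college` and `degree_level` and the toy rows below are assumptions, so the printed numbers say nothing about the real catalog.

```python
import pandas as pd

# Toy rows for illustration only; NOT the real catalog counts.
df = pd.DataFrame({
    "college": ["School of Technology"] * 4 + ["School of Business"] * 4,
    "degree_level": ["master", "master", "master", "doctorate",
                     "master", "doctorate", "doctorate", "doctorate"],
})

# normalize="index" divides each row by its total, so each college sums to 1.0
shares = pd.crosstab(df["college"], df["degree_level"], normalize="index")
print(shares.round(2))
```

Normalizing by row compares proportions instead of raw counts, which matters when colleges have very different numbers of instructors.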

Instructor Alma Maters

The university (alma mater) field required minimal cleaning.

A full export is available here: colleges.csv, which lists each alma mater and the number of instructors from that institution.

university,count

Western Governors University,70

Capella University,70

Walden University,39
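A count table in this shape can be produced with pandas `value_counts`. This sketch assumes the parsed rows sit in a DataFrame with a `university` column; the sample rows are made up.

```python
import pandas as pd

# Made-up parsed rows; only the "university" column is used here.
df = pd.DataFrame({"university": [
    "Capella University", "Western Governors University",
    "Capella University", "Walden University",
]})

counts = (
    df["university"]
    .value_counts()                 # instructors per institution, largest first
    .rename_axis("university")      # name the index so it becomes a column
    .reset_index(name="count")
)

# Pass a path such as "colleges.csv" to write a file instead of returning text
csv_text = counts.to_csv(index=False)
print(csv_text)
```

Ties (such as the two institutions at 70 above) come out in whatever order `value_counts` returns them; sorting with `counts.sort_values(["count", "university"], ascending=[False, True])` gives a deterministic order.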

Next: WGU Instructor Atlas 2 — Geo Mapping

In Part 2, we’ll use geotagging to map instructor alma maters across the globe, showing the diversity of WGU’s faculty origins.