Project Summary

This project converts unstructured Reddit discussions about Western Governors University (WGU) courses into a structured feedback dataset.

Starting from approximately 27,000 collected posts, a staged pipeline identifies course-side pain points, aggregates recurring issues, and produces queryable tables for inspection and reporting.

Key outcomes

- 1,103 posts analyzed across 242 courses

- 156 within-course issue clusters identified

- 23 normalized issue categories

- SQLite database of structured artifacts

- static inspection site for exploration

The project demonstrates dataset construction from informal text, schema-validated extraction, benchmarked prompt refinement, and reproducible analytical workflows.

Problem Context

Students frequently discuss course experiences in public Reddit communities, but these discussions are fragmented, informal, and difficult to aggregate reliably.

The goal is to turn that fragmented discussion into structured, aggregatable data and to analyze which course-level issues recur.

The analysis documents observable patterns in public discussion without making claims about course quality, student satisfaction, or institutional performance.

Dataset Construction

Collection funnel

- ~27,000 collected Reddit posts

- 1,103 posts retained in the analysis corpus

- 242 WGU courses represented

- 51 subreddits included

Filtering criteria:

- exactly one identifiable WGU course reference

- negative sentiment threshold (VADER compound < -0.2)

- structurally valid post text
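The three filtering criteria can be expressed as one predicate per post. The sketch below is only an illustration: the course-code regex (one capital letter plus three digits, like "C211") and the `text` field name are assumptions, not the project's documented rules. The VADER scorer is passed in as a function so the filter can be exercised without the sentiment dependency.

```python
import re
from typing import Callable, Iterable

# Hypothetical course-code pattern (one capital letter + three digits, e.g. "C211").
# The project's real matching rules are not described in this summary.
COURSE_CODE = re.compile(r"\b[A-Z]\d{3}\b")

# Threshold from the filtering criteria: keep posts with VADER compound < -0.2.
NEGATIVE_THRESHOLD = -0.2


def course_codes(text: str) -> set:
    """Distinct course codes mentioned in a post."""
    return set(COURSE_CODE.findall(text))


def keep_post(text: str, compound: Callable[[str], float]) -> bool:
    """Apply the three corpus filters to one post's text."""
    if not text or not text.strip():            # structurally valid post text
        return False
    if len(course_codes(text)) != 1:             # exactly one course reference
        return False
    return compound(text) < NEGATIVE_THRESHOLD   # negative sentiment


def filter_posts(posts: Iterable[dict], compound: Callable[[str], float]) -> list:
    """Keep posts that pass every filter, tagging each with its single course code."""
    kept = []
    for post in posts:
        text = post.get("text") or ""
        if keep_post(text, compound):
            (code,) = course_codes(text)
            kept.append({**post, "course_code": code})
    return kept
```

With the `vaderSentiment` package installed, `compound` could be `lambda t: analyzer.polarity_scores(t)["compound"]`, where `analyzer = SentimentIntensityAnalyzer()` is created once and reused.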

A frozen Stage-0 dataset snapshot is used throughout the pipeline.

Counts represent posts, not students.

Dataset Characteristics

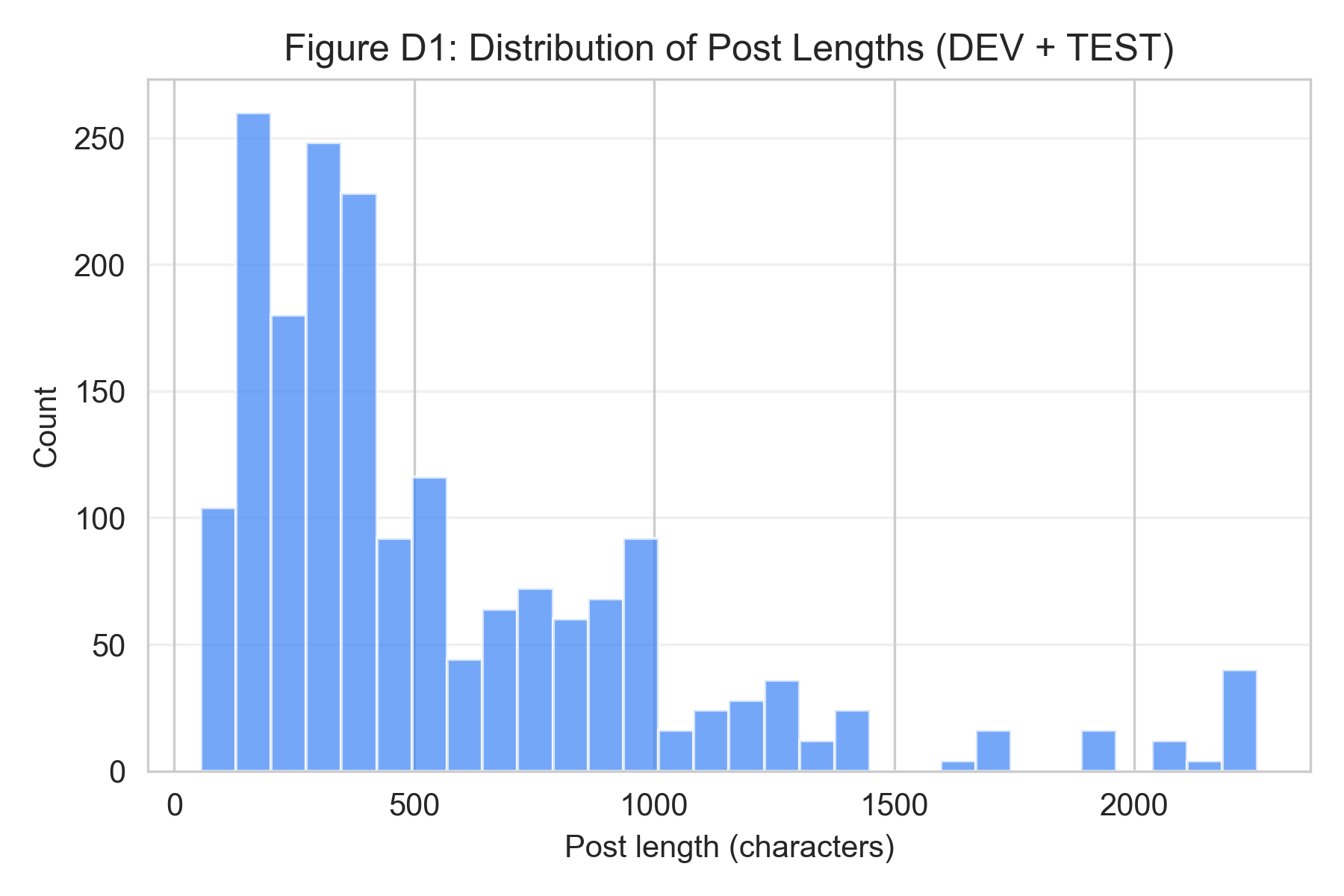

Figure - Post length distribution

Variation in post length reflects informal student writing rather than standardized feedback.

Technical Implementation

Tools

- Python (pandas)

- SQLite

- VADER sentiment filtering

- Pydantic schema validation

- GPT-5-mini structured extraction (Stage 1)

- static site generation

Final structured outputs are stored in SQLite tables that support SQL queries across courses, issue categories, and programs.

All intermediate outputs are stored as schema-validated artifacts.
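As an illustration of the kind of cross-course query the SQLite tables support, here is a minimal sketch using Python's built-in `sqlite3`. The two-table schema, its column names, and the `ROLLUP` query are hypothetical; the C211 rows are taken from the Stage-2 prompt's example output, while `D335_1` and every label assignment are made up for the demo.

```python
import sqlite3

# Hypothetical two-table schema; the project's real table and column names
# are not listed in this summary.
SCHEMA = """
CREATE TABLE course_clusters (
    cluster_id    TEXT PRIMARY KEY,   -- Stage-2 id, e.g. C211_1
    course_code   TEXT NOT NULL,
    issue_summary TEXT NOT NULL,
    num_posts     INTEGER NOT NULL
);
CREATE TABLE global_issues (
    cluster_id             TEXT PRIMARY KEY REFERENCES course_clusters(cluster_id),
    normalized_issue_label TEXT NOT NULL  -- Stage-3 category
);
"""

# Per-category rollup. SUM(num_posts) counts cluster memberships, not distinct
# posts, because a post may belong to more than one Stage-2 cluster.
ROLLUP = """
SELECT g.normalized_issue_label,
       COUNT(DISTINCT c.course_code) AS courses,
       SUM(c.num_posts)              AS cluster_posts
FROM global_issues AS g
JOIN course_clusters AS c USING (cluster_id)
GROUP BY g.normalized_issue_label
ORDER BY cluster_posts DESC, g.normalized_issue_label
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Toy rows for illustration only (D335_1 and the labels are invented).
conn.executemany(
    "INSERT INTO course_clusters VALUES (?, ?, ?, ?)",
    [
        ("C211_1", "C211", "no instructor response for approvals or passwords", 3),
        ("C211_2", "C211", "OA result stuck, blocking progress", 1),
        ("C211_3", "C211", "PA doesn't match OAs", 1),
        ("D335_1", "D335", "practice assessment misaligned with OA", 2),
    ],
)
conn.executemany(
    "INSERT INTO global_issues VALUES (?, ?)",
    [
        ("C211_1", "instructor_or_support_unresponsiveness"),
        ("C211_2", "workflow_or_policy_barriers"),
        ("C211_3", "assessment_material_misalignment"),
        ("D335_1", "assessment_material_misalignment"),
    ],
)

rows = conn.execute(ROLLUP).fetchall()
```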

Pipeline Architecture

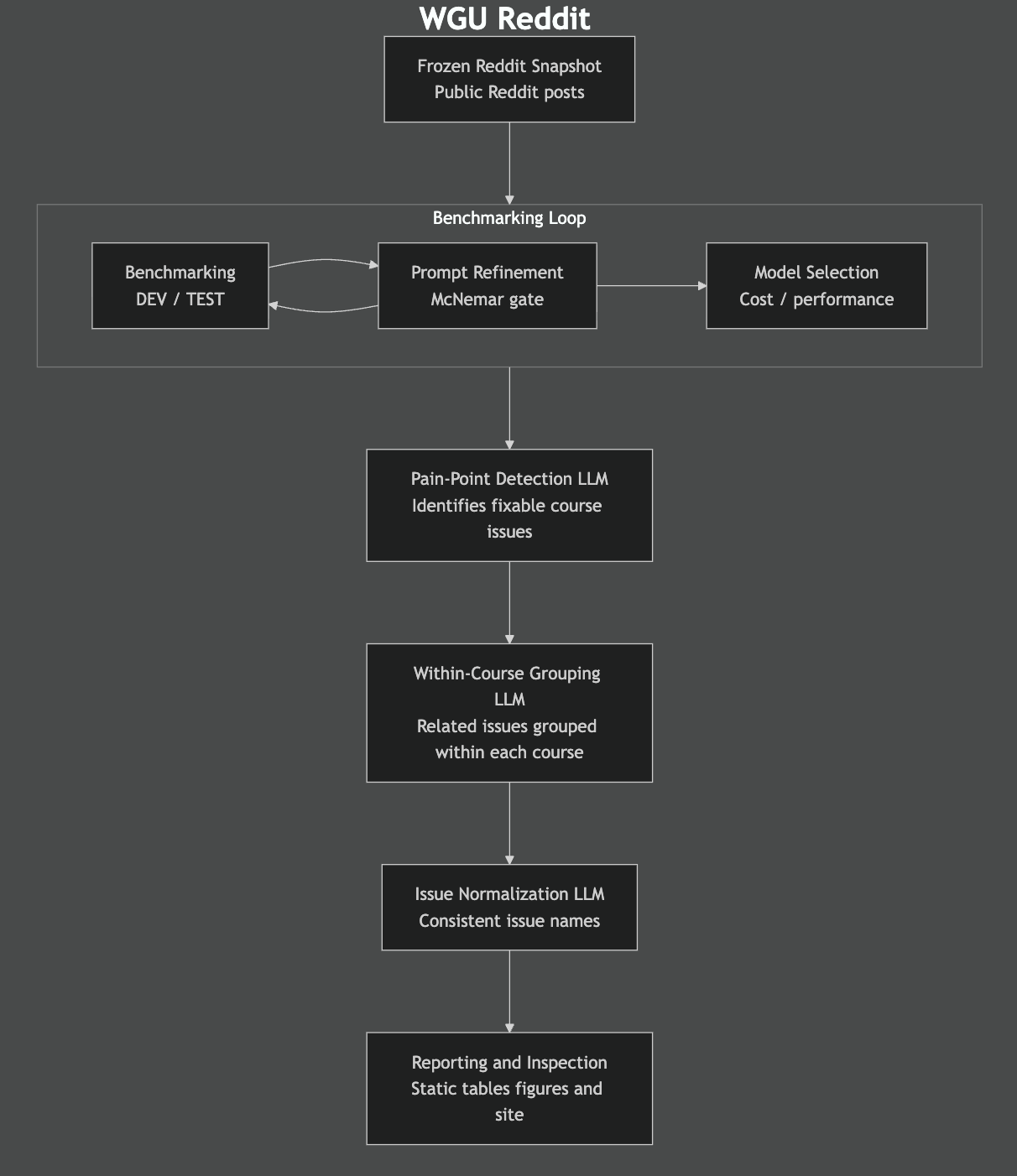

Figure - Pipeline overview

Staged workflow converting Reddit posts into structured feedback artifacts.

Stage 0 - Frozen corpus

Defines the stable 1,103-post dataset.

Stage 1 - Pain-point classification

Posts are labeled:

- y - course-side issue

- n - not a course-side issue

- u - unclear (too vague or mixed to decide) or invalid output

Structured fields are extracted for y-labeled posts.

Outputs are schema-validated and stored.
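The project validates Stage-1 outputs with Pydantic. As a dependency-free approximation, the sketch below applies checks inferred from the schema in the Stage-1 prompt: allowed labels, a confidence in [0, 1], no extra fields, and an empty `pain_points` list unless the label is "y". Requiring at least one pain point for "y" and assigning confidence 0.0 to invalid output are assumptions; `Stage1Result`, `validate_stage1`, and `parse_or_unknown` are hypothetical names.

```python
import json
from dataclasses import dataclass, field

# Stand-in for the project's Pydantic model: checks inferred from the
# Stage-1 prompt's schema, not copied from the project.
TOP_KEYS = {"contains_painpoint", "confidence", "pain_points"}
POINT_KEYS = {"root_cause_summary", "pain_point_snippet"}


@dataclass
class Stage1Result:
    contains_painpoint: str            # "y", "n", or "u"
    confidence: float                  # 0.0 to 1.0
    pain_points: list = field(default_factory=list)


def validate_stage1(raw: str) -> Stage1Result:
    """Parse one model response; raise ValueError if it violates the schema."""
    data = json.loads(raw)  # JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or set(data) != TOP_KEYS:
        raise ValueError("missing or extra top-level fields")
    label, conf, points = data["contains_painpoint"], data["confidence"], data["pain_points"]
    if not isinstance(label, str) or label not in {"y", "n", "u"}:
        raise ValueError(f"invalid label {label!r}")
    if isinstance(conf, bool) or not isinstance(conf, (int, float)) or not 0.0 <= conf <= 1.0:
        raise ValueError("confidence must be a number in [0, 1]")
    if not isinstance(points, list):
        raise ValueError("pain_points must be a list")
    if label == "y" and not points:
        raise ValueError("'y' requires at least one pain point")
    if label != "y" and points:
        raise ValueError("'n' and 'u' require an empty pain_points list")
    for p in points:
        if not isinstance(p, dict) or set(p) != POINT_KEYS:
            raise ValueError("pain point has missing or extra fields")
        if not all(isinstance(p[k], str) and p[k].strip() for k in POINT_KEYS):
            raise ValueError("pain point fields must be non-empty strings")
    return Stage1Result(label, float(conf), points)


def parse_or_unknown(raw: str) -> Stage1Result:
    """Map unparseable or invalid output to 'u' (confidence 0.0 is an assumption)."""
    try:
        return validate_stage1(raw)
    except ValueError:
        return Stage1Result("u", 0.0, [])
```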

Stage 1 classification prompt

You are a course designer reviewing a Reddit post about a WGU course.

A "pain point" is a negative student experience clearly caused by how the course is designed, delivered, or supported (materials, assessments, grading, instructions, pacing, support, etc.).

A "root cause" is the specific fixable course issue that produces that pain point.

Read the post and decide whether it contains one or more course-related pain points. If there are several, list each distinct pain point. Merge complaints that share the same root cause into one pain point.

Return exactly one JSON object with this schema:

{
  "contains_painpoint": "y" | "n" | "u",
  "confidence": number between 0.0 and 1.0,
  "pain_points": [
    {
      "root_cause_summary": "...",
      "pain_point_snippet": "..."
    }
  ]
}

Rules:

• "contains_painpoint":
  • "y": clearly at least one course-related pain point.
  • "n": clearly no course-related pain point.
  • "u": too vague or mixed to decide.
• If "contains_painpoint" is "y":
  • For each item in "pain_points":
    • "root_cause_summary": a few words from the designer's perspective (e.g., "practice test misaligned with final", "unresponsive instructors").
    • "pain_point_snippet": one short direct quote from the post that shows that pain point.
• If "contains_painpoint" is "n" or "u":
  • Set "pain_points" to [].
• "confidence": your confidence in the chosen label, where 1.0 = very certain and 0.0 = very uncertain.

Do NOT include any extra fields or wrap the JSON in Markdown.

Reddit post metadata:

• post_id: {post_id}

• course_code: {course_code}

Reddit post text:

{post_text}

Result: 847 of 1,103 posts (77%) were classified as containing fixable course-side pain points.
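Filling the Stage-1 template needs some care: the prompt contains literal JSON braces, so Python's `str.format()` would parse them as replacement fields and raise an error. A minimal sketch of a safer substitution, assuming the three placeholders shown in the prompt are the only ones (`render_prompt` is a hypothetical helper, not the project's code):

```python
# Hypothetical helper for filling the Stage-1 template. str.format() is avoided
# because the template's literal JSON braces would be parsed as replacement fields.
PLACEHOLDERS = ("post_id", "course_code", "post_text")


def render_prompt(template: str, **values: str) -> str:
    """Substitute {post_id}, {course_code}, and {post_text} in the template."""
    missing = [name for name in PLACEHOLDERS if name not in values]
    if missing:
        raise KeyError(f"missing values for {missing}")
    out = template
    # post_text goes last, so braces inside the user's post are never re-substituted.
    for name in PLACEHOLDERS:
        out = out.replace("{" + name + "}", values[name])
    return out
```

Plain string replacement leaves every other brace untouched, which keeps the JSON schema in the prompt intact.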

Stage 2 - Within-course aggregation

Pain-point posts are grouped into recurring issue clusters within each course.

Stage 2 aggregation prompt

You will receive social media posts about university course {course_code} - {course_title}.

Your task is to cluster the posts by their root-cause.

A pain point is a specific, actionable issue in the course's design, delivery, instructions, materials, or support: a friction that WGU can realistically fix or improve.

Do not group based on general frustration, motivation, or personal struggle.

Cluster only the actionable, course-side issues described in the summaries/snippets.

Your responsibilities:

1. Cluster posts by shared root-cause issue, from the perspective of WGU course designers.

2. Focus only on actionable pain points (unclear instructions, outdated materials, rubric mismatches, broken tools, missing resources, etc.).

3. Produce clean JSON only, strictly following the structure below.

4. Assign cluster_ids using the format: COURSECODE_INT (example: C211_1).

5. Sort clusters by largest size first.

6. A post may appear in multiple clusters if appropriate.

7. Output raw JSON only - no explanations, no commentary.

---

REQUIRED JSON STRUCTURE

{
  "courses": [
    {
      "course_code": "COURSE_CODE",
      "course_title": "COURSE_TITLE",
      "total_posts": 0,
      "clusters": [
        {
          "cluster_id": "COURSECODE_1",
          "issue_summary": "short root-cause description",
          "num_posts": 0,
          "post_ids": [
            "post_id_1",
            "post_id_2"
          ]
        }
      ]
    }
  ]
}

---

EXAMPLE OUTPUT

{
  "courses": [
    {
      "course_code": "C211",
      "course_title": "Scripting and Programming - Applications",
      "total_posts": 5,
      "clusters": [
        {
          "cluster_id": "C211_1",
          "issue_summary": "no instructor response for approvals or passwords",
          "num_posts": 3,
          "post_ids": ["1ci7efm", "1e12ncu", "1i5z4ev"]
        },
        {
          "cluster_id": "C211_2",
          "issue_summary": "OA result stuck, blocking progress",
          "num_posts": 1,
          "post_ids": ["1lu24vq"]
        },
        {
          "cluster_id": "C211_3",
          "issue_summary": "PA doesn't match OAs",
          "num_posts": 1,
          "post_ids": ["1m2hl4r"]
        }
      ]
    }
  ]
}

Result: 156 course-level clusters identified.
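Several of the Stage-2 prompt's rules are machine-checkable, so a response can be verified before it is stored. The sketch below checks one course entry for COURSECODE_INT ids, unique ids, `num_posts` matching `post_ids`, and largest-first ordering, plus one extra check that is an assumption rather than a stated rule: every cited post id must come from the input. It does not flag a post appearing in several clusters, since the prompt allows that. `check_stage2_course` is a hypothetical name.

```python
import re


def check_stage2_course(course: dict, input_post_ids: set) -> list:
    """Return problems found in one Stage-2 course entry (empty list = passes)."""
    problems = []
    id_pattern = re.compile(re.escape(course["course_code"]) + r"_\d+")
    clusters = course["clusters"]
    for c in clusters:
        cid = c["cluster_id"]
        if not id_pattern.fullmatch(cid):
            problems.append(f"{cid}: id does not follow COURSECODE_INT")
        if c["num_posts"] != len(c["post_ids"]):
            problems.append(f"{cid}: num_posts does not match post_ids")
        # Extra (assumed) check: the model must not cite posts it was never given.
        unknown = set(c["post_ids"]) - input_post_ids
        if unknown:
            problems.append(f"{cid}: post ids not in input {sorted(unknown)}")
    ids = [c["cluster_id"] for c in clusters]
    if len(ids) != len(set(ids)):
        problems.append("duplicate cluster_id")
    sizes = [c["num_posts"] for c in clusters]
    if sizes != sorted(sizes, reverse=True):
        problems.append("clusters not sorted largest first")
    return problems
```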

Stage 3 - Cross-course normalization

Course-level clusters are normalized into a global issue catalog.

Stage 3 normalization prompt

You will receive a flat list of Stage-2 course-level pain point clusters.

Each cluster has:

• cluster_id

• issue_summary

• course_code (input only - do NOT include in output)

• course_title (input only - do NOT include in output)

• num_posts

Your job is to merge these into cross-course global issues.

---

ROOT-CAUSE CLASSIFICATION RULES

• Group clusters by the fixable course-side root cause only.

• If a summary includes both a cause and a symptom (e.g., "wrong resource list causing confusion"), classify by the course-side cause, never by the student symptom.

• Student feelings (confusion, stress, frustration, delay) are not categories.

• Exclusive assignment: each cluster_id appears once in the final output.

---

USE SEEDED GLOBAL ISSUE TYPES WHEN THEY MATCH

Reuse these labels exactly when applicable:

unclear_or_ambiguous_instructions
assessment_material_misalignment
platform_or_environment_failures
missing_or_low_quality_materials
evaluator_inconsistency_or_poor_feedback
workflow_or_policy_barriers
missing_or_broken_resources
instructor_or_support_unresponsiveness
tooling_environment_misconfiguration_or_guidance
grading_or_answer_key_or_process_issues
workload_or_scope_issues
proctoring_or_exam_platform_issues
scheduling_or_simulation_availability_issues
ai_detection_confusion_and_false_flags
prerequisite_gaps_or_unpreparedness
external_tool_dependency_risks

If a cluster fits one of these meanings, reuse that issue.

Create a new global issue only if no seeded one matches.

---

SORTING RULE

Sort global_clusters by total num_posts per issue (sum across its member clusters), highest first.

---

OUTPUT FORMAT (STRICT)

{
  "global_clusters": [
    {
      "course_issue_description": "string",
      "normalized_issue_label": "string",
      "member_cluster_ids": ["id1","id2"]
    }
  ],
  "unassigned_clusters": ["cluster_id_a"]
}

• No text outside JSON

• Do not include course_code or course_title

• Every cluster_id must appear exactly once

Result: 23 global issue categories.
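The exclusive-assignment rule ("every cluster_id must appear exactly once") and the sorting rule can both be checked mechanically against the Stage-2 input. A minimal sketch, assuming the input is available as a mapping from cluster_id to num_posts; `check_stage3` is a hypothetical name, and `D335_1` in the test data is invented.

```python
from collections import Counter


def check_stage3(output: dict, stage2_sizes: dict) -> list:
    """Check a Stage-3 response against its input clusters.

    stage2_sizes maps every input cluster_id to its num_posts.
    """
    problems = []
    # Count each id across global clusters and the unassigned list.
    seen = Counter(output["unassigned_clusters"])
    for g in output["global_clusters"]:
        seen.update(g["member_cluster_ids"])
    dupes = sorted(cid for cid, n in seen.items() if n > 1)
    missing = sorted(set(stage2_sizes) - set(seen))
    extra = sorted(set(seen) - set(stage2_sizes))
    if dupes:
        problems.append(f"assigned more than once: {dupes}")
    if missing:
        problems.append(f"missing from output: {missing}")
    if extra:
        problems.append(f"not in Stage-2 input: {extra}")
    # Sorting rule: total num_posts per global issue, highest first.
    totals = [sum(stage2_sizes.get(cid, 0) for cid in g["member_cluster_ids"])
              for g in output["global_clusters"]]
    if totals != sorted(totals, reverse=True):
        problems.append("global_clusters not sorted by total num_posts")
    return problems
```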

Stage 4 - Deterministic reporting

Final tables and inspection site are generated from stored artifacts.

No LLM calls occur beyond normalization.
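Because this stage makes no model calls, the same stored artifacts should always produce the same report. One way to make that property testable is to flatten the artifacts into explicitly sorted rows and fingerprint them. A sketch under assumptions: the artifact shapes follow the Stage-2 and Stage-3 prompt formats, the "unassigned" label and both function names are hypothetical.

```python
import hashlib
import json


def report_rows(stage2_courses: list, stage3: dict) -> list:
    """Flatten stored Stage-2/Stage-3 artifacts into sorted report rows.

    Each row is (normalized_issue_label, course_code, cluster_id, num_posts).
    Clusters not placed in any global issue get the hypothetical label "unassigned".
    """
    label_of = {cid: "unassigned" for cid in stage3["unassigned_clusters"]}
    for g in stage3["global_clusters"]:
        for cid in g["member_cluster_ids"]:
            label_of[cid] = g["normalized_issue_label"]
    rows = [
        (label_of.get(c["cluster_id"], "unassigned"), course["course_code"],
         c["cluster_id"], c["num_posts"])
        for course in stage2_courses
        for c in course["clusters"]
    ]
    return sorted(rows)  # explicit ordering: output does not depend on input order


def fingerprint(rows: list) -> str:
    """Stable SHA-256 digest of the rows, for comparing two reporting runs."""
    payload = json.dumps(rows, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```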

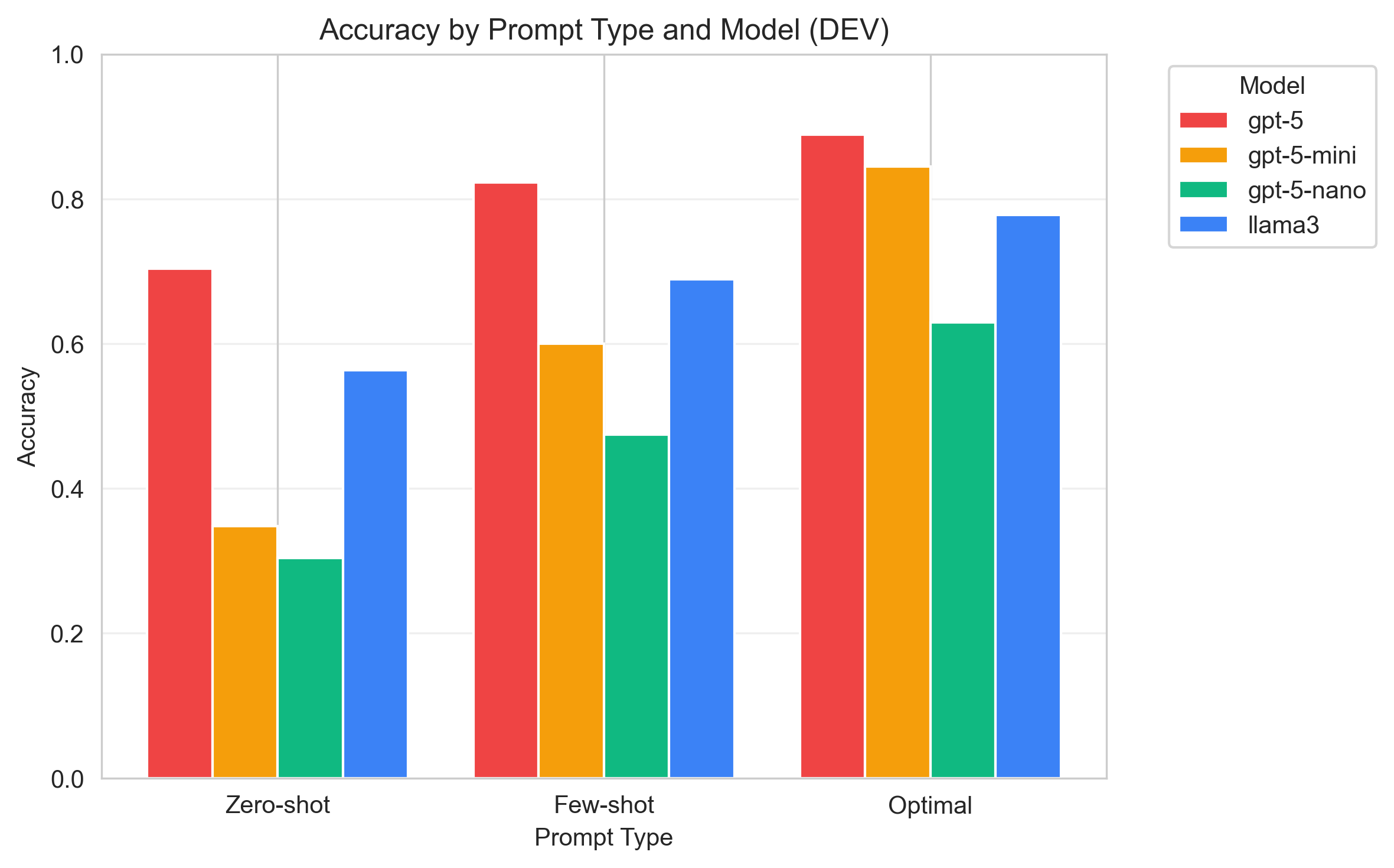

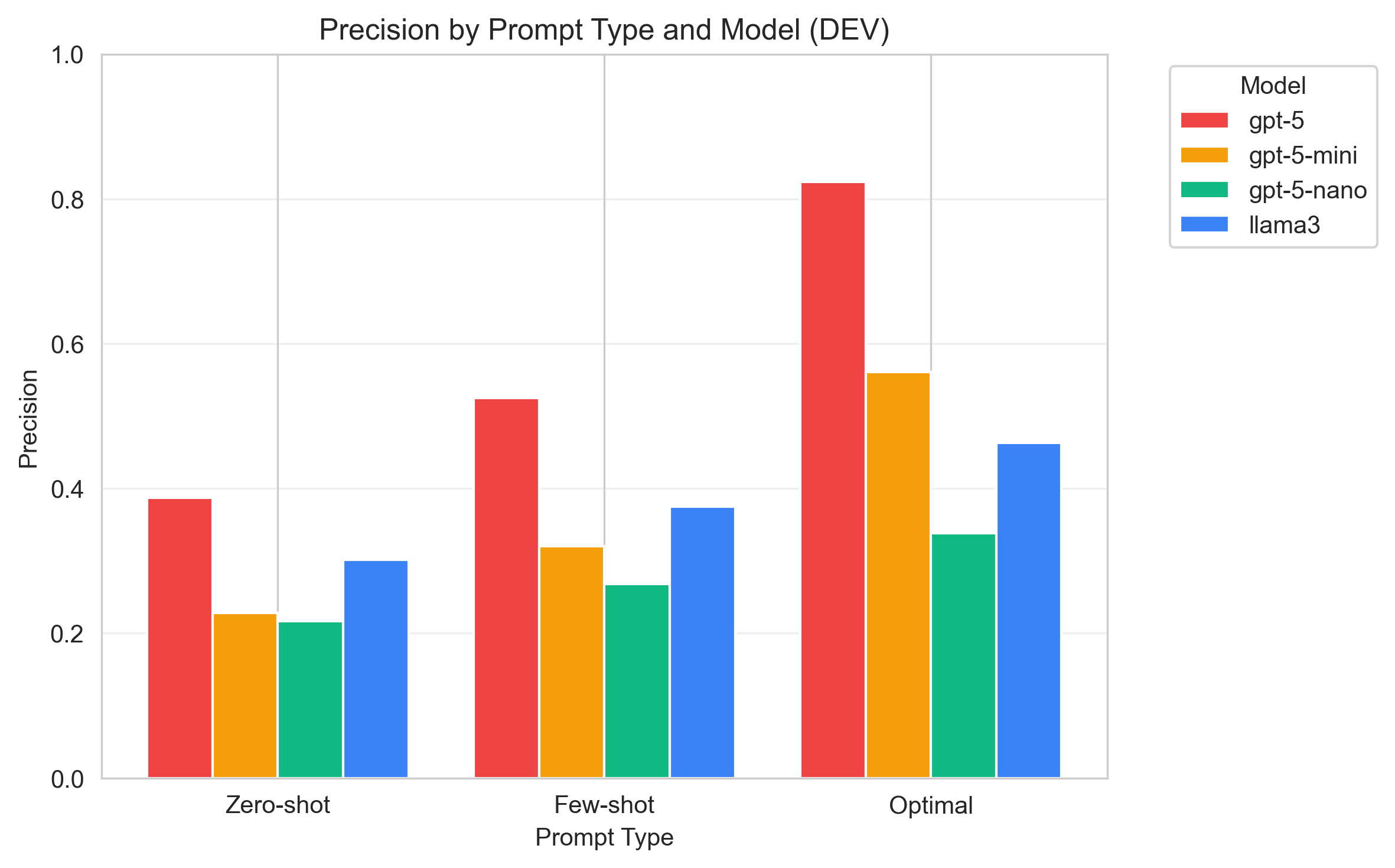

Stage-1 Benchmarking

Stage-1 classification is evaluated using a frozen DEV/TEST benchmark.

Metrics computed:

- TP, FP, FN, TN

- Accuracy

- Precision

- Recall

- F1
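The metrics above follow from the four confusion counts. A minimal sketch, treating "y" as the positive class; counting both "n" and "u" as negative is an assumption, since this summary does not say how "u" is scored in the benchmark. `binary_metrics` is a hypothetical name.

```python
def binary_metrics(gold: list, pred: list, positive: str = "y") -> dict:
    """Confusion counts plus accuracy, precision, recall, and F1.

    Assumption: every label other than `positive` ("n" and "u") is negative.
    """
    if len(gold) != len(pred):
        raise ValueError("gold and pred must be the same length")
    pairs = list(zip(gold, pred))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)
    fp = sum(1 for g, p in pairs if g != positive and p == positive)
    fn = sum(1 for g, p in pairs if g == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn, "accuracy": accuracy,
            "precision": precision, "recall": recall, "f1": f1}
```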

Prompt configurations are compared using paired predictions and McNemar’s test with continuity correction.
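McNemar's test uses only the discordant pairs: b, the items configuration A gets right and B gets wrong, and c, the reverse. With continuity correction the statistic is (|b - c| - 1)^2 / (b + c), compared against a chi-square distribution with one degree of freedom, whose upper tail equals erfc(sqrt(x / 2)). A stdlib-only sketch (`mcnemar` is a hypothetical name; clamping |b - c| - 1 at zero is a common convention, assumed here):

```python
import math


def mcnemar(gold: list, pred_a: list, pred_b: list) -> tuple:
    """Continuity-corrected McNemar test on paired predictions.

    Returns (b, c, statistic, p_value).
    """
    if not len(gold) == len(pred_a) == len(pred_b):
        raise ValueError("all three lists must be the same length")
    triples = list(zip(gold, pred_a, pred_b))
    b = sum(1 for g, a, p in triples if a == g and p != g)  # A right, B wrong
    c = sum(1 for g, a, p in triples if a != g and p == g)  # A wrong, B right
    if b + c == 0:
        return b, c, 0.0, 1.0  # no discordant pairs: no evidence of a difference
    stat = max(0, abs(b - c) - 1) ** 2 / (b + c)
    p_value = math.erfc(math.sqrt(stat / 2))  # chi-square(1) upper tail
    return b, c, stat, p_value
```

For example, b = 10 and c = 2 give a statistic of 49/12 (about 4.08) and p of roughly 0.043.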

Prompt selection balances:

- paired statistical testing

- F1 score

- precision/recall tradeoffs

- false-positive and false-negative review

- cost and latency

The refined Stage-1 prompt produced statistically significant improvements in classification outcomes.

Benchmark Visualizations

Figure - Accuracy comparison (DEV)

Classification accuracy across prompt configurations.

Figure - Precision comparison (DEV)

Prompt refinement substantially reduces false positives.

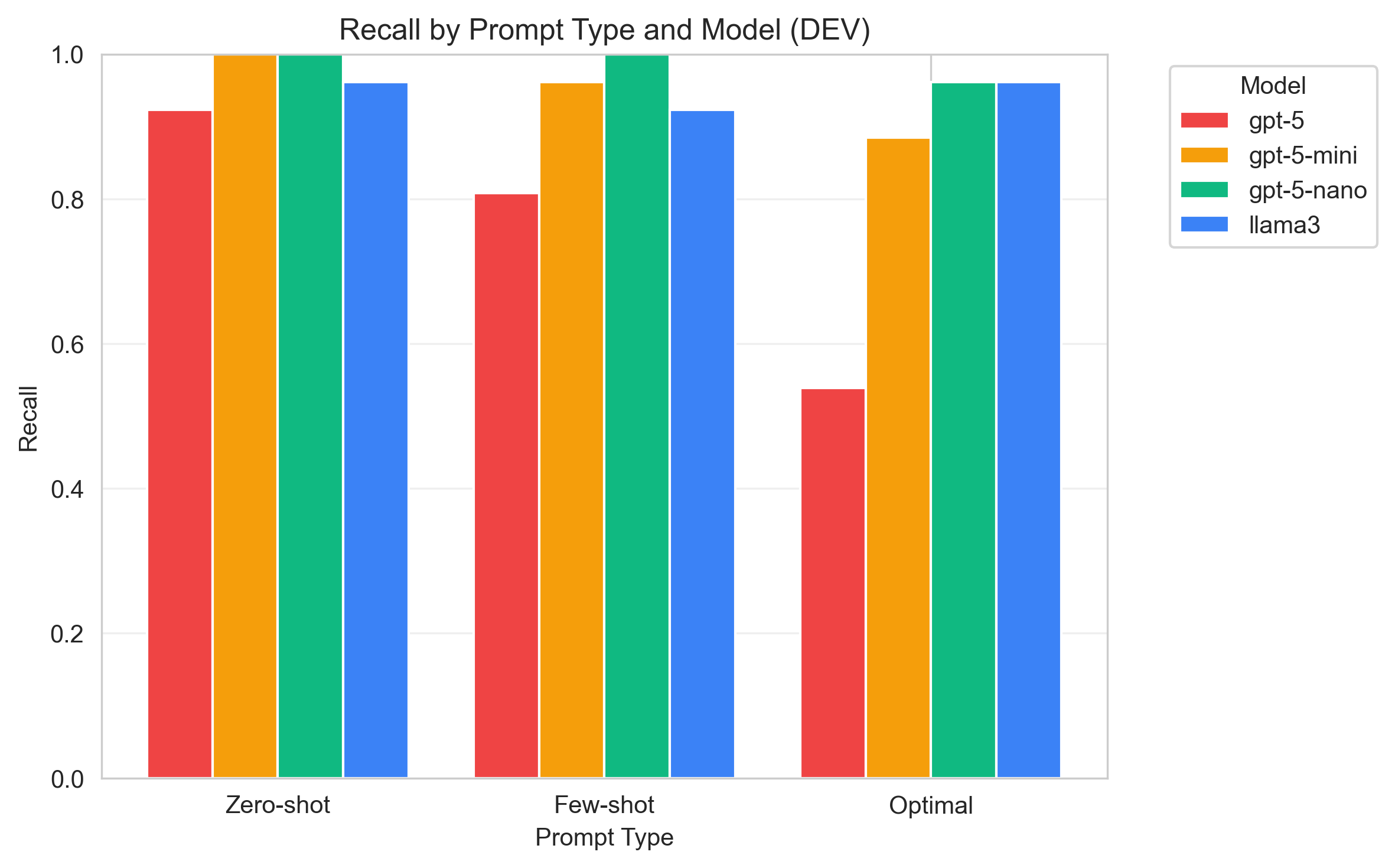

Figure - Recall comparison (DEV)

Recall tradeoffs reflect task ambiguity.

Figure - F1 comparison (DEV)

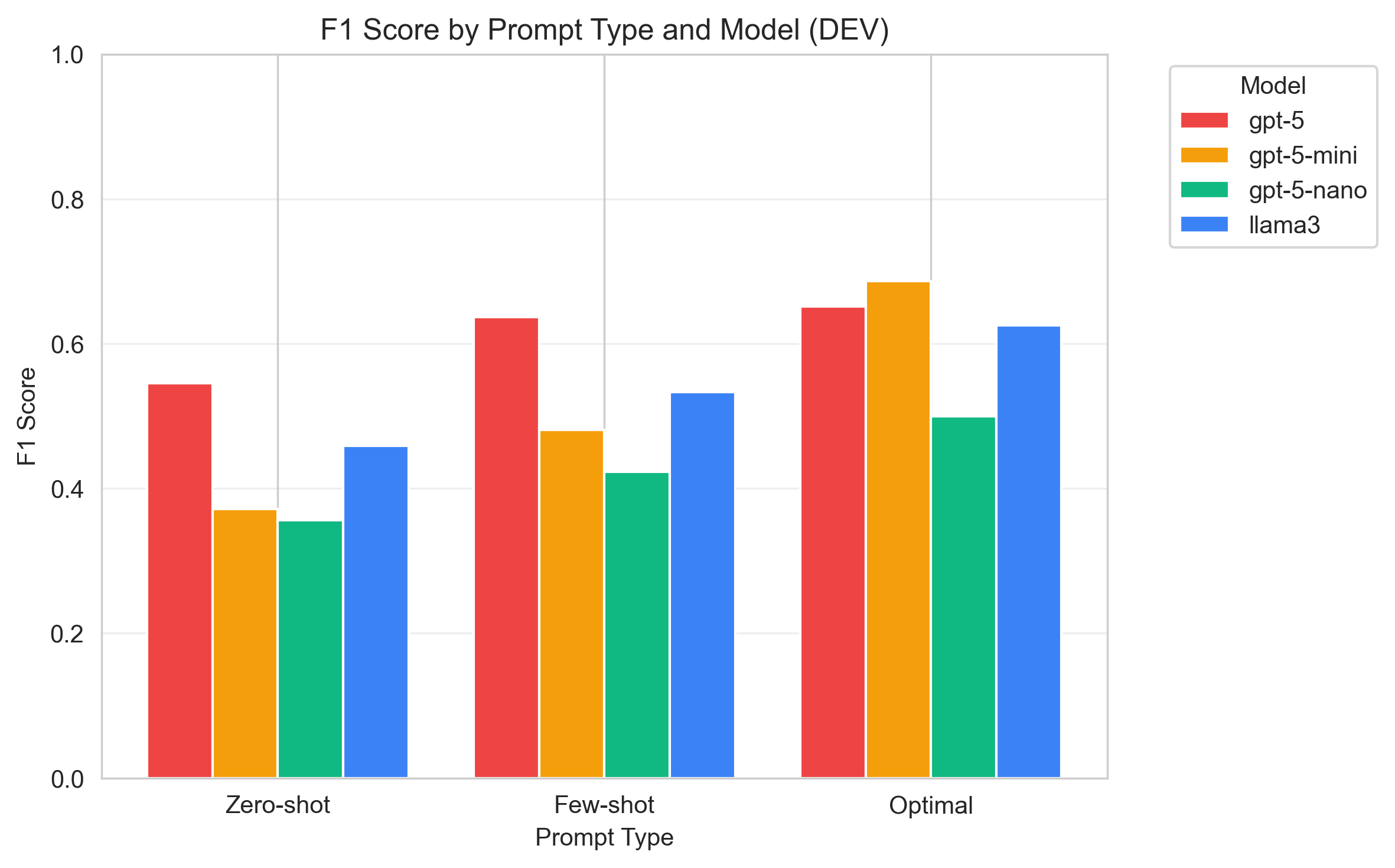

F1 is used as the primary summary metric for prompt selection.

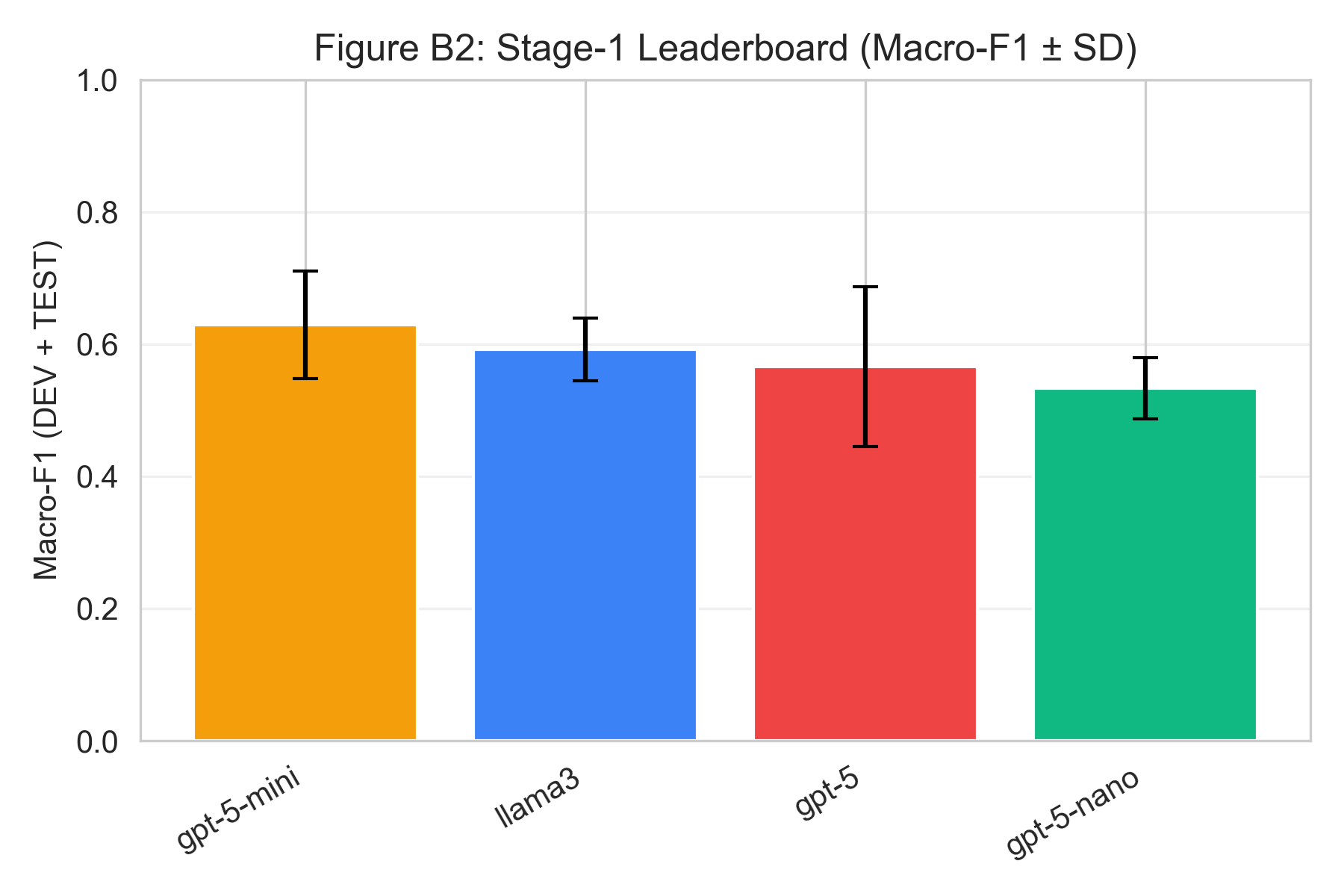

Figure - Model leaderboard (macro F1)

Comparison across candidate models.
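Macro F1 averages the per-class F1 scores without weighting by class size, so a minority label counts as much as a majority one. A minimal sketch using the identity F1 = 2TP / (2TP + FP + FN); defaulting to the labels that appear in either list is an assumption, since the leaderboard's exact class set is not stated here.

```python
def macro_f1(gold: list, pred: list, labels=None) -> float:
    """Unweighted mean of one-vs-rest F1 over the given labels."""
    if labels is None:
        labels = sorted(set(gold) | set(pred))  # assumed default class set
    scores = []
    for label in labels:
        tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
        fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
        fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores) if scores else 0.0
```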

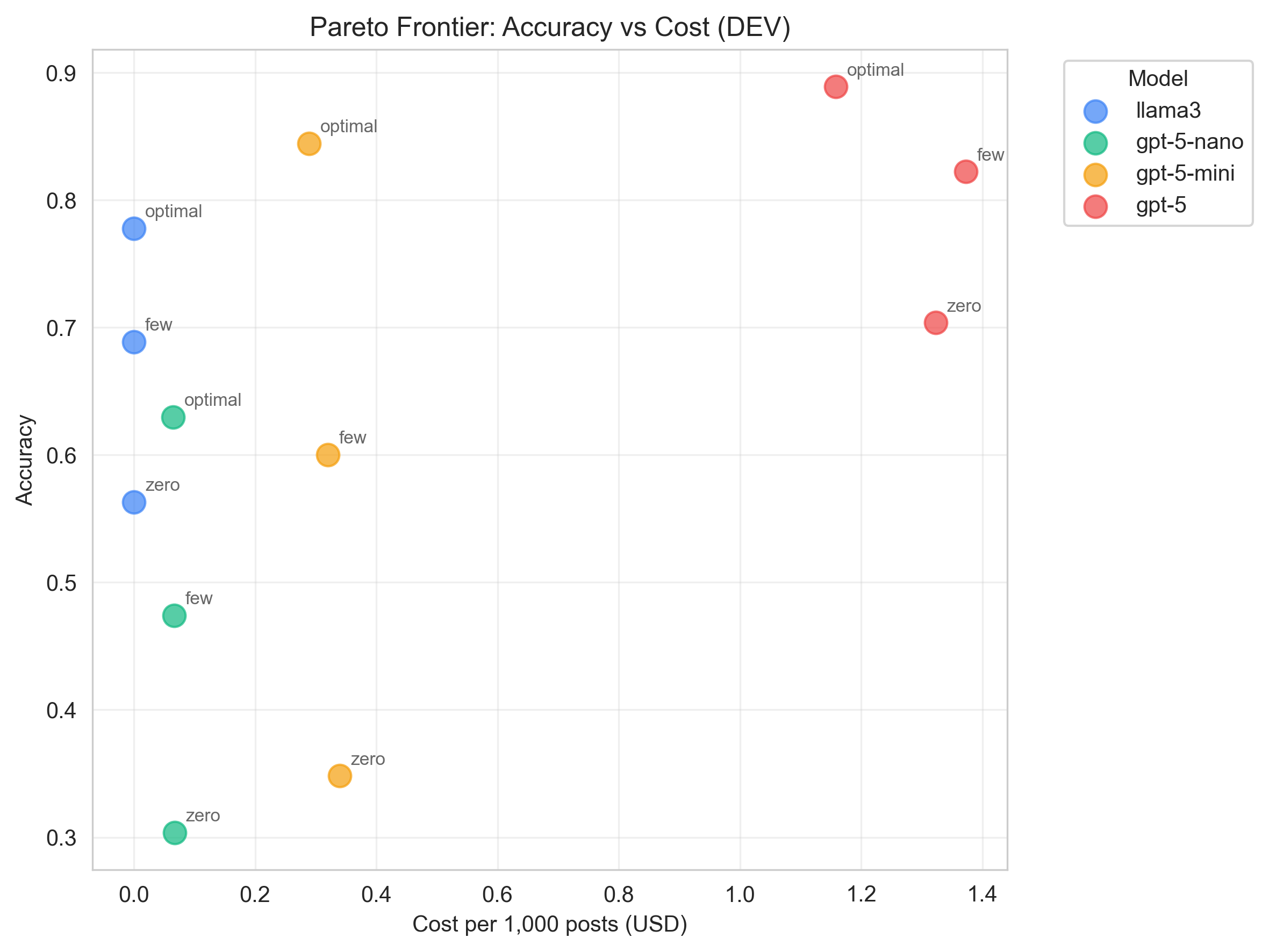

Figure - Accuracy vs cost tradeoff

Performance compared with estimated API cost.

Figure - F1 vs cost tradeoff

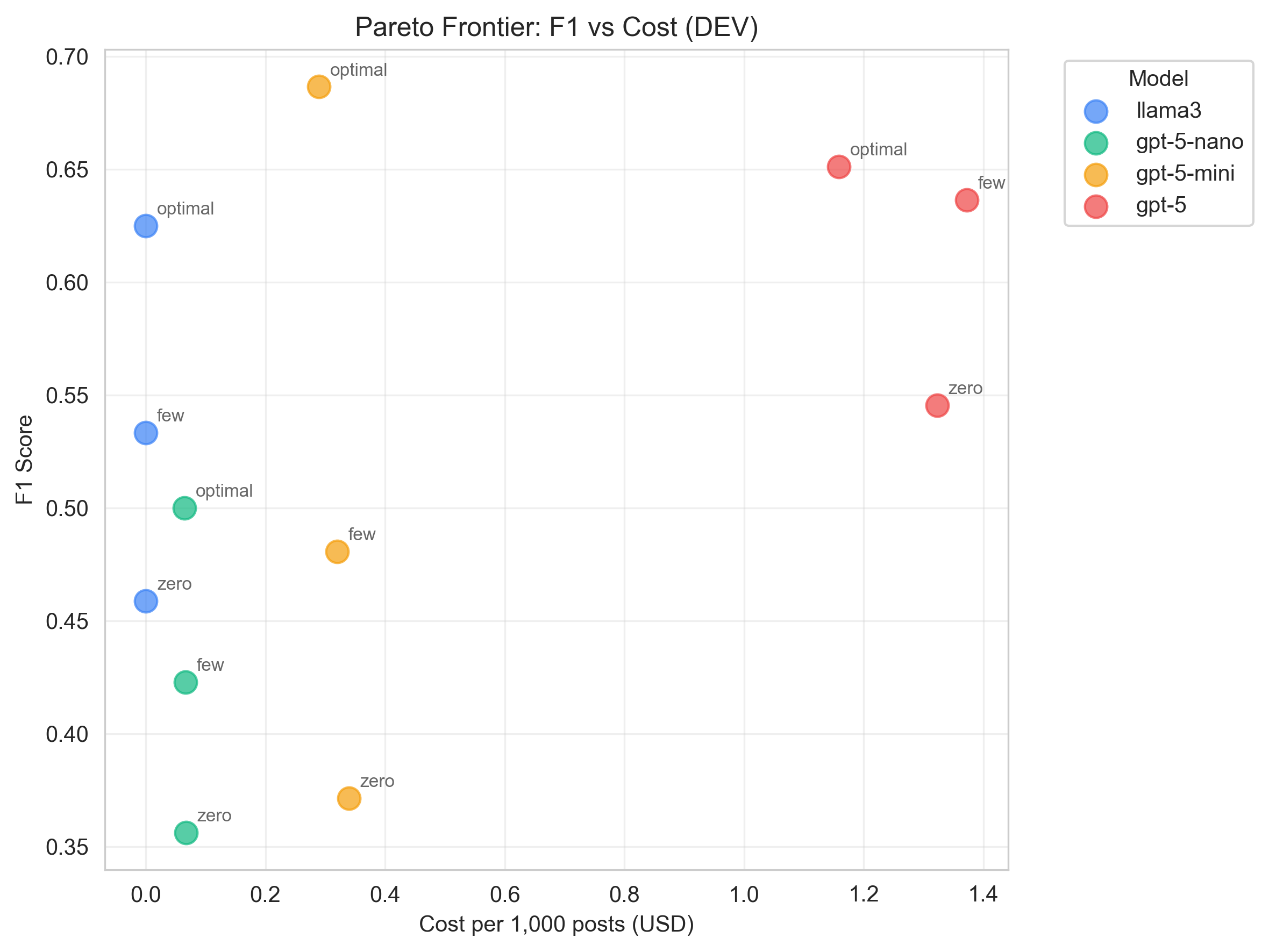

The refined GPT-5-mini configuration provides strong performance at lower cost.
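One way to read the cost tradeoff plots is to keep only configurations that no other configuration beats on both axes (the Pareto front). A sketch under assumptions: cost is estimated from token counts and per-million-token prices supplied by the caller, and the configuration names and numbers in any example are placeholders, not benchmark results.

```python
def estimated_cost(input_tokens: int, output_tokens: int,
                   usd_per_m_input: float, usd_per_m_output: float) -> float:
    """API cost in USD from token counts and per-million-token prices."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


def pareto_front(configs) -> list:
    """Configs not dominated on (lower cost, higher F1), cheapest first.

    configs: iterable of (name, cost, f1). A config is dominated when another
    is no more expensive and no less accurate, and strictly better on one axis.
    """
    configs = list(configs)
    front = [
        (name, cost, f1)
        for name, cost, f1 in configs
        if not any(
            c2 <= cost and s2 >= f1 and (c2 < cost or s2 > f1)
            for _, c2, s2 in configs
        )
    ]
    return sorted(front, key=lambda t: t[1])
```

A configuration on the front is not automatically the right choice; the selection criteria listed earlier (paired testing, error review, latency) still apply.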

Key Findings

Across the analyzed corpus:

- Assessment clarity issues appeared across multiple programs and courses.

- Platform or tooling friction appeared in several technical courses.

- Prerequisite knowledge gaps were mentioned across disciplines.

- Issue patterns tend to repeat within courses rather than appearing as isolated posts.

Issue clusters varied widely by course. Some courses showed a single dominant issue type, while others showed multiple distinct issue categories.

These findings reflect recurring patterns in public discussion rather than institutional performance metrics.

Inspection Site

The project includes a static snapshot site that renders stored analytical artifacts.

Reviewers can:

- browse issue categories

- view contributing courses

- inspect excerpts

- link to original public posts

The site performs no computation and reflects a frozen pipeline run.
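A static site of this kind can be produced by pure formatting over a frozen artifact. The sketch below assumes a hypothetical JSON artifact mapping each issue label to rows with `course_code`, `excerpt`, and `url` fields; the file layout and function names are illustrative, not the project's generator. Escaping every value with `html.escape` keeps quoted post text from breaking the markup.

```python
import html
import json
from pathlib import Path


def render_issue_page(label: str, rows: list) -> str:
    """Render one issue-category page; formatting only, no analysis."""
    items = "\n".join(
        f'<li><strong>{html.escape(r["course_code"])}</strong>: '
        f'"{html.escape(r["excerpt"])}" '
        f'(<a href="{html.escape(r["url"])}">original post</a>)</li>'
        for r in sorted(rows, key=lambda r: (r["course_code"], r["url"]))
    )
    title = html.escape(label)
    return (
        "<!DOCTYPE html>\n"
        f'<html><head><meta charset="utf-8"><title>{title}</title></head>\n'
        f"<body>\n<h1>{title}</h1>\n<ul>\n{items}\n</ul>\n</body></html>\n"
    )


def build_site(artifact_path: Path, out_dir: Path) -> list:
    """Write one page per issue label from a frozen JSON artifact."""
    data = json.loads(artifact_path.read_text(encoding="utf-8"))
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for label in sorted(data):  # sorted for a stable, reproducible build
        page = out_dir / f"{label}.html"
        page.write_text(render_issue_page(label, data[label]), encoding="utf-8")
        written.append(page)
    return written
```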

Limits and Interpretation Boundaries

- Public Reddit posts only

- Not representative of the student population

- Counts represent posts, not students

- Requiring exactly one course reference per post excludes multi-course discussions and limits generality

- Sentiment used only as a corpus filter

- No causal inference or course-quality claims

This project documents recurring patterns observable in public discussion under explicit dataset constraints.

What This Project Demonstrates

- dataset construction from informal text

- schema-validated LLM extraction pipelines

- benchmark-driven prompt refinement

- staged artifact-based workflows

- SQL-queryable analytical outputs

- reproducible reporting pipelines

Full code and artifacts are available here: WGU Reddit Feedback Analyzer.